The dashcam footage is terrifying to watch. An Uber self-driving car hurtles down an Arizona street at night. The car’s bored-looking human operator looks down until too late. A woman wheeling a bike – Elaine Herzberg – looms out of the darkness a split second before she is killed.

In the summer of 2001 I visited Carnegie Mellon University in Pittsburgh while researching The Reverend’s Logic. My host was Sebastian Thrun, a German-born professor who had created a field he called ‘probabilistic robotics’. His enthusiasm was infectious.

The autonomous wheeled robots he demoed for me in the lab were not given maps of their surroundings. Instead they had to make guesses about their position and speed, with a sense of conviction determined by Bayes’ theorem. They used laser rangefinders to generate evidence (in the form of position data) that their processors used to support or reject hypotheses. The technology was new but the logic was straight out of the 18th century.
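To make that logic concrete, here is a minimal sketch of a one-dimensional histogram filter, a textbook form of Bayesian localisation. It is not Thrun's code: the corridor layout, the sensor probabilities (`p_hit`, `p_miss`) and the motion noise (`p_exact`) are all hypothetical values chosen for illustration. The robot starts with no idea where it is, then alternates between sensing (a Bayes' theorem update) and moving (which blurs its belief).

```python
# Hypothetical circular corridor: the robot's sensor reports "door" or "wall".
WORLD = ["door", "wall", "wall", "door", "wall"]


def normalize(belief):
    """Rescale the belief so its probabilities sum to 1."""
    total = sum(belief)
    return [b / total for b in belief]


def sense(belief, world, reading, p_hit=0.8, p_miss=0.2):
    """Bayes update: weight each cell by how well it explains the reading."""
    weighted = [
        b * (p_hit if cell == reading else p_miss)
        for b, cell in zip(belief, world)
    ]
    return normalize(weighted)


def move(belief, step, p_exact=0.8):
    """Motion update: the robot usually moves `step` cells, but may slip by one."""
    n = len(belief)
    p_slip = (1.0 - p_exact) / 2.0
    return [
        p_exact * belief[(i - step) % n]
        + p_slip * belief[(i - step - 1) % n]
        + p_slip * belief[(i - step + 1) % n]
        for i in range(n)
    ]


# Start with a uniform belief: every position is equally likely.
belief = [1.0 / len(WORLD)] * len(WORLD)

belief = sense(belief, WORLD, "door")  # evidence: the sensor sees a door
belief = move(belief, 1)               # the robot drives one cell forward
belief = sense(belief, WORLD, "wall")  # evidence: the sensor now sees a wall

for position, probability in enumerate(belief):
    print(f"cell {position}: {probability:.3f}")
```

After seeing a door, moving one cell and then seeing a wall, the belief concentrates on the cells immediately after each door. The robot never knows its position for certain; it only holds hypotheses with more or less conviction, which is the point of the approach.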

Now fast-forward 17 years. After leaving CMU for Stanford, Thrun pioneered self-driving cars, and sold his robotics technology to Google via a startup he founded. He became an evangelist for self-driving cars, telling a 2011 TED conference that he was motivated by the death of his best friend in a car accident aged 18. Meanwhile, Google used Thrun’s ideas in its own self-driving car business, now called Waymo.

Then ride-sharing giant Uber decided it wanted a piece of the self-driving pie. It hired one of Thrun’s former Google colleagues, Anthony Levandowski, although Uber was forced to fire him after a judge upheld a complaint by Google. It lured dozens of Thrun’s former robotics colleagues at CMU away from academia, alongside other well-known Bayesian artificial intelligence experts such as Cambridge University professor Zoubin Ghahramani (whom I also interviewed in 2001).

Uber shows no sign of slowing down its self-driving hiring spree. According to the company’s website, there are currently more than 150 open positions in its Advanced Technologies Group, which houses autonomous vehicles. Using salary averages from Glassdoor, that amounts to a $14 million annual staff budget yet to be filled.

Both Waymo and Uber are currently testing their autonomous vehicles in Arizona, or at least they were until last week, when Uber’s vehicle hit and killed Herzberg in the town of Tempe. Although the local police chief said that the accident wasn’t the fault of Uber’s vehicle, the footage suggests otherwise.

With the human operator clearly distracted, the only thing that could have saved Herzberg was the vehicle’s brain. But the car doesn’t even slow down before the impact. What was Uber’s car thinking?

Now, artificial intelligence has progressed in leaps and bounds since I met Thrun in 2001. Deep learning that harnesses layers of neural networks allows superior predictions to be made, achieving striking breakthroughs in games such as Go or chess. Some commentators argue that the old AI template of Bayesian inference has outlived its usefulness and should be discarded.

In the world of self-driving cars, deep learning might involve logging thousands of hours of humans driving and using that training data to develop a library of heuristics that outperforms human drivers. But there is one aspect of autonomous transport that remains deeply Bayesian: simultaneous localisation and mapping, known as SLAM, which follows the same principles Thrun refined 20 years ago.

Even with detailed on-board maps and GPS, SLAM remains a Bayesian guessing game because of the inherent uncertainty of road environments – unmapped obstacles, other cars and, of course, cyclists and pedestrians. Even with greatly improved sensors and high-definition cameras, there are situations where the interplay between an autonomous car’s belief in its location and the driving heuristic it plucks from its machine learning library will lead to bad outcomes.

In practice, that might mean the car overreacts to something that isn’t there, or underreacts to something that is – such as a lady wheeling her bike.

We can ask some questions. What kind of accident tolerance level should society accept for self-driving cars? If aggressive companies like Uber push the envelope of their self-driving algorithms to compete, how should regulators respond? Should reputable academics or universities work with such companies?

Let’s leave aside questions about Arizona’s light-touch regulation of autonomous cars, and the eagerness of local police to quickly blame the victim in this case. And remember Thrun’s point that thousands of deaths occur every day across the world as a result of human drivers.

Given all that, even the best self-driving cars are going to kill people as their adoption increases. If self-driving cars do it appreciably less than humans, we might learn to live with them. But as more of these deaths happen, the intentions and perceptions of autonomous vehicles deserve a lot more attention.

Levelling the Playing Field

Levelling the Playing Field

Barclays and Labour's growth plan

Barclays and Labour's growth plan

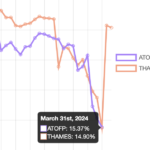

Plummeting bonds reflect souring UK mood for outsourcing and privatisation

Plummeting bonds reflect souring UK mood for outsourcing and privatisation

Dimon rolls trading dice with excess capital

Dimon rolls trading dice with excess capital