There are several reasons to read this book. A curious non-scientist will learn a lot about how today’s experts think about concepts like causation and correlation. Those with a technical or science background, who absorbed statistics as part of a university education, will find it useful to retool their thinking, which might be contaminated with out-dated concepts that this book will sweep away. And then there are those who work or invest in artificial intelligence that might benefit from a wake up call to incorporate thinking that is largely missing from their field.

I met Judea Pearl in 2001 while researching my unpublished probability book.

Pearl was an affable Israeli-American professor who had been recommended to me as a key player in Bayesian probability, particularly for its application to complex chains of inference known as Bayesian networks. These models of reasoning had emerged in the late 1980s as the best way to create intelligent agents – such as self-driving cars – that combined background knowledge with new data from their environment to make decisions.

The idea had evolved from neural networks, an earlier formalism that combined data without the superstructure of probability theory. Although Pearl modestly said “my contribution is very tiny” he was the one who added probability theory to the formalism.

During my interview with him, Pearl was almost self-effacing. He answered my questions in detail and was generous with his historical anecdotes. He had a copy of Thomas Bayes’ original 1764 paper and argued that this paper was as much about causality as making inferences from data. Bayes theorem, Pearl said, provided a window into causality that had been forgotten for 250 years.

I soon learned that there was something more to Pearl. A couple of days later in Redmond, I spoke to David Heckerman, a senior researcher at Microsoft who had qualified as a medical doctor before getting his computer science PhD. Heckerman told me that Pearl’s ideas on causality were so powerful that they could end up abolishing the need for randomised experiments – because his networks could infer the same result. “It has a potential for profound impact on society”, Heckerman said.

But then my other interviewees – who praised Pearl for his Bayesian insights – warned me off. Cambridge professor David Spiegelhalter summed up the view of mainstream Bayesian statisticians with his comment that Pearl’s causal inference was “deeply controversial. Frankly I don’t like it either”.

Seventeen years after my meeting, Bayesian networks are ubiquitous in society. From error-correcting codes in mobile phones to self-driving cars, they power the most transformational technologies around today. Yet Pearl’s causal thinking is only just getting a foothold in social science, and has largely been sidelined by the artificial intelligence community.

This book, which is co-authored by Pearl and science writer Dana MacKenzie, explains why. The resistance to causality comes from the deeply engrained – or one might say ossified – teachings of statistical founding fathers like Karl Pearson and Ronald Fisher a hundred or so years ago. We owe to them catchphrases like “correlation is not causation” which has been conveyed in stats courses to millions of undergraduates over the decades.

As Pearl recounts, early pioneers of causal thinking, such as US biologist Sewall Wright, were dismissed out of hand by the powerful cliques of statisticians these founding fathers built up around themselves. The only legitimate route to causality in science was the randomised controlled trial, invented by Fisher, now accepted as the gold standard of statistical inference.

This force of history also explains why so many of the Bayesians I spoke to seventeen years ago were wary of Pearl. Their approach of looking at probability as a degree of belief (whether for a human or AI agent) was fiercely opposed by the schools of Pearson and Fisher, who countered with a theory of probability based purely on frequencies of observed events.

Having their approach accepted as equivalent was a hard-won victory for Bayesians. It might have been easier for Pearl, with a tenured research position at UCLA, to keep pushing the foundations. Not so much for the likes of Spiegelhalter, who helped expose abnormal death rates in UK hospitals and had to defend his methods against traditionalists.

“Most statisticians, as soon as there’s any idea of learning causality from non-randomised data, they will almost universally refuse to step over that mark”, Spiegelhalter told me in a 2003 interview. “You look over your shoulder, and all your colleagues will come down on you like a ton of bricks. You could say there’s a vested interest, but the fact is that there’s a fantastic tradition of doing things with randomised trials. There is a huge industry – the pharmaceutical industry – that literally relies on it.”

However, this resistance may be on the cusp of change. In his book, Pearl puts both Bayesians and frequentists at the bottom rung of a ‘ladder of causation’. Consider a canonical example that Pearl examines in detail: whether smoking causes cancer. If you observe that smokers have a greater probability of getting cancer than non-smokers, you might argue that smoking caused cancer.

Wrong, argued the likes of Fisher, pointing out that there might be a third variable – such as an unseen ‘cancer gene’ – that was more common in smokers and also happened to cause cancer. Because you can’t see this gene you can’t control for it in the population and therefore you can’t reach any conclusions based solely on observations of smoking and cancer. Such a variable is called a ‘confounder’.

Pearl’s response is that we have climb up his ladder of causation from the bottom rung, which is only about observations, to the next level, where one is allowed to make interventions. Observing X (which might be controlled by Z) is different from doing X (blocking any influence of Z).

Armed with diagrams, or graphical models of causal mechanisms, Pearl shows how to defeat confounders such as the mythical ‘cancer gene’. RCTs are one form of intervention that emerge from his theory (and in his relaxed way he assures fans of RCTs that they can carry on using them as if his theory didn’t exist). But what about those situations where it is unethical or impractical to conduct experiments?

As Heckerman told me in 2001, Pearl solved the problem. Using the causal calculus devised by him and his students, you can indeed infer causal relationships from observations without performing experiments, stepping over the mark that Spiegelhalter refused to step across.

The book contains plenty of examples of how this works, and we can now see the broader community in public health or economics beginning to use these methods. For example, the UK Medical Research Council is now offering funding for causal research on health interventions, citing Pearl’s research as a reference.

In the book, Pearl goes further, climbing the next rung of his causation ladder to applying counterfactuals. These are ubiquitous in areas such as the law, as a way of apportioning blame or liability. Pearl shows once again how to use observational data to answer ‘would-have-been’ questions.

There’s one final chapter in the book where Pearl comes full circle back to his earlier work in computer science. He muses on why artificial intelligence has passed on using causal modelling. Advances like deep learning are flawed, he argues, because they are stuck on the bottom rung of the ladder of causation, searching for connections between data without interventions or counterfactuals. One is inclined to agree with Pearl that AI will never display human-like intelligence until it overcomes this deficiency.

Levelling the Playing Field

Levelling the Playing Field

Barclays and Labour's growth plan

Barclays and Labour's growth plan

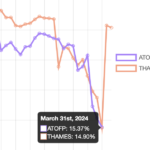

Plummeting bonds reflect souring UK mood for outsourcing and privatisation

Plummeting bonds reflect souring UK mood for outsourcing and privatisation

Dimon rolls trading dice with excess capital

Dimon rolls trading dice with excess capital