Any account of AI needs to mention Douglas Hofstadter. At roughly the halfway point between Alan Turing’s foundational work on computing and today’s large language models, Hofstadter’s bestselling book Gödel, Escher, Bach: An Eternal Golden Braid appeared. Like many other 1980s teenagers, I was captivated by ‘GEB’.

More than 40 years after GEB’s publication, Hofstadter is upset. When a fan asked him to comment on GPT-4’s attempt to summarise his book, Hofstadter reacted furiously, slamming LLMs as ‘repellent’. In a recent podcast, he described the success of AI as ‘traumatic’.

I suspect the reason for Hofstadter’s trauma is that his symbolic view of AI served as a kind of bulwark shielding humanity against machines. If AI meant representing the world symbolically with logic, then famous pitfalls of logic such as Gödel’s incompleteness theorem and strange loops implied that human-level AI was almost infinitely far away. To Hofstadter’s horror, this view turned out to be quite mistaken.

Facing up to the new reality is Melanie Mitchell, with her book Artificial Intelligence: A Guide for Thinking Humans. She was Hofstadter’s PhD student and taught herself to build neural networks with the open-source tools that are now so readily available. She gives good, detailed explanations of the basic flavours of network and the problems they are used for, such as classification, recognition and prediction.

This gives Mitchell a credible platform from which to critique all these inventions. She details problems with biased training data and with adversarial examples that fool the systems, and she casts a beady eye on claims that a particular system has ‘beaten humans’ at some task. She captures the artisanal flavour of much machine learning research, with its adjustable ‘hyperparameters’ and its use of services such as Amazon Mechanical Turk, where low-paid humans label the images used to train AI systems.

The real meat of Mitchell’s book comes in the last chapters, where she discusses natural language processing (NLP), the domain of ChatGPT. She gives a good account of the key technical breakthrough of encoding words as vectors, which drove progress in machine translation and image captioning (the book ends in 2018, before the emergence of today’s large language models, or LLMs).
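
The idea behind word vectors can be illustrated with a toy sketch. Each word is represented as a list of numbers, and words used in similar contexts end up with similar vectors, so geometry stands in for meaning. The three-dimensional vectors below are invented purely for illustration (real embeddings such as word2vec are learned from large bodies of text and have hundreds of dimensions), and the `analogy` helper is hypothetical. It shows the well-known “king minus man plus woman lands near queen” arithmetic, using cosine similarity to find the closest word.

```python
import math

# Hypothetical, hand-made 3-dimensional vectors: the dimensions loosely
# suggest "royalty", "male" and "female". Real word vectors are learned
# from text, not chosen by hand, and have hundreds of dimensions.
VECTORS = {
    "king":   [0.9, 0.9, 0.1],
    "queen":  [0.9, 0.1, 0.9],
    "man":    [0.1, 0.9, 0.1],
    "woman":  [0.1, 0.1, 0.9],
    "prince": [0.7, 0.8, 0.1],
    "apple":  [0.0, 0.1, 0.1],
}

def cosine(u, v):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c):
    """Answer 'a is to b as c is to ?' by computing b - a + c and
    returning the nearest remaining word by cosine similarity."""
    target = [vb - va + vc for va, vb, vc in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(VECTORS[w], target))

print(analogy("man", "king", "woman"))  # → queen
```

The program manipulates numbers without any model of what a king or a queen is. That is the gap Mitchell presses on when she argues that these systems lack semantic understanding.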

As with earlier machine learning breakthroughs, Mitchell highlights the flaws in these applications, which she attributes to their lack of semantic understanding, or of any mental model of the text they manipulate. This brings her full circle back to Hofstadter and her beloved symbolic approach, which she argued (in 2018) lies beyond the reach of neural networks.

Barclays and Labour's growth plan

Barclays and Labour's growth plan

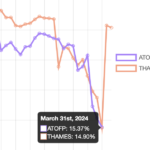

Plummeting bonds reflect souring UK mood for outsourcing and privatisation

Plummeting bonds reflect souring UK mood for outsourcing and privatisation

Dimon rolls trading dice with excess capital

Dimon rolls trading dice with excess capital